The real business problem behind AI in production

In recent years, the adoption of artificial intelligence has grown rapidly inside companies. Data teams are able to build promising models, proofs of concept get approved, and expectations for business impact rise. However, when it’s time to bring those models into production, obstacles appear that many organizations weren’t prepared to face.

It’s common to see scenarios where a model performs well in a controlled environment but loses accuracy as soon as it starts receiving real-world data. In other cases, there’s no clear visibility into which model version is running, what data was used for training, or who is responsible for maintaining it. Over time, small manual fixes pile up, operational risk increases, and the business’s trust in AI declines.

This set of problems isn’t only technical. It directly affects cost, risk, compliance, and the indicators that inform business decisions. AI initiatives that don’t scale, or that fail silently in production, end up consuming budget without delivering consistent returns. That’s exactly where the need for MLOps comes in.

What is MLOps?

MLOps, short for Machine Learning Operations, is an approach that combines processes, practices, and tools to manage the entire lifecycle of machine learning models in production. The goal is not just to deploy a model, but to ensure it keeps working properly over time—even as data, context, and business rules change.

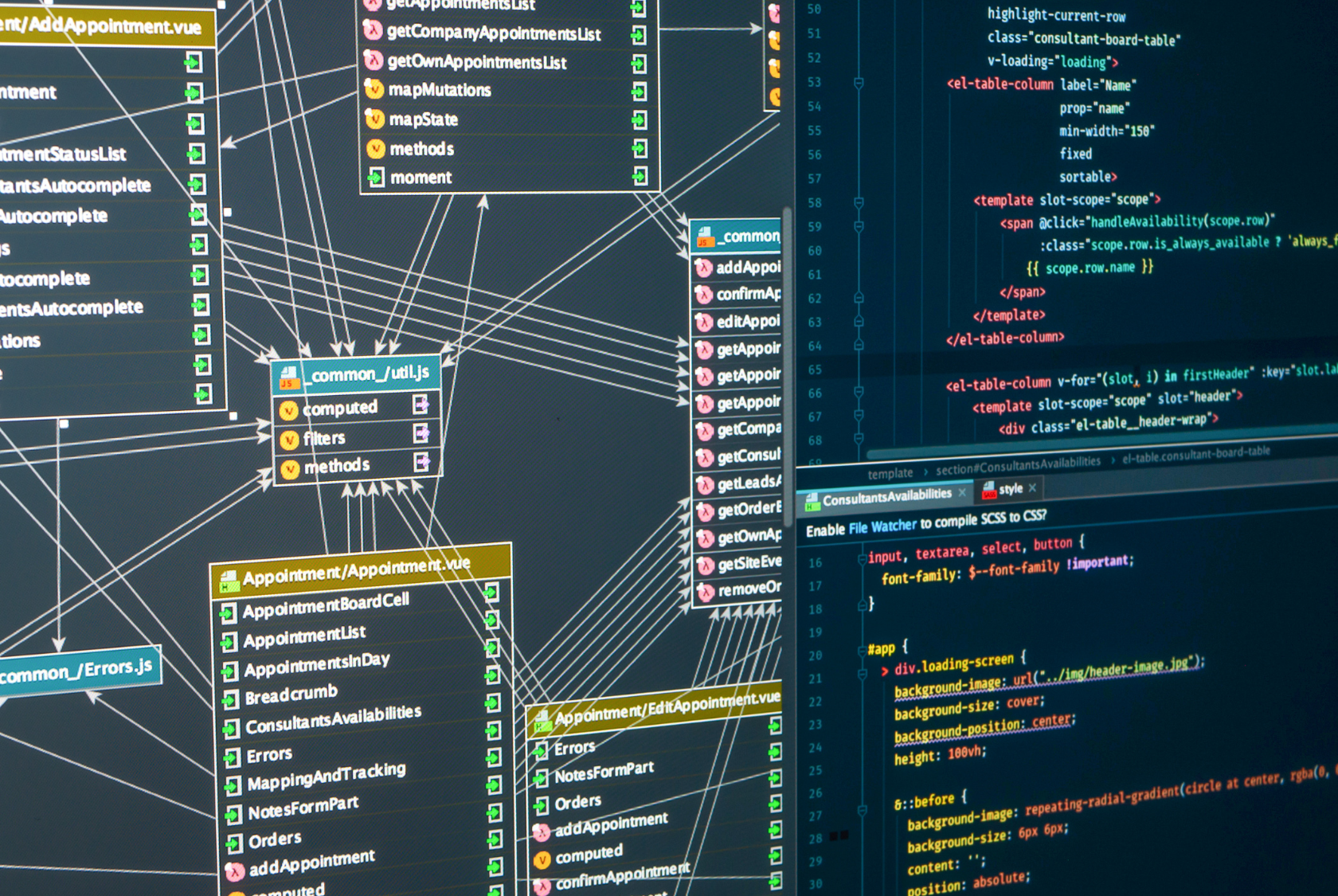

In practice, MLOps builds a bridge between data science, software engineering, and operations. It establishes standards for training, versioning, validation, deployment, monitoring, and retraining. More than technology, it’s a way of working that requires persistence, cross-team collaboration, and a long-term view.

While data science focuses on finding strong models and engineering ensures stable systems, MLOps makes sure these two worlds connect in a structured, continuous way.

At its core, MLOps ensures:

- Reproducibility of models and data

- Controlled and auditable changes

- Continuous performance monitoring

- Risk mitigation over time

It is not just a technical layer, but an operational mindset that connects data science, engineering, and business teams.

Why MLOps is not just “DevOps for AI”

Although MLOps inherits DevOps concepts like automation and continuous integration, it deals with additional challenges. In traditional applications, software behavior changes only when the code changes. In machine learning, system performance can shift even if the code stays the same—simply because the data changed.

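On top of that, models learn statistical patterns from the past. When real-world behavior changes, those patterns stop being valid. This phenomenon, known as model drift, covers both shifts in the input data (data drift) and shifts in the relationship between inputs and outcomes (concept drift), and it can compromise critical decisions without anyone noticing right away.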
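One way to make drift visible is to compare the distribution of a feature at training time with what the model sees in production. The sketch below uses the Population Stability Index (PSI), a common drift score; it is an illustration under simple assumptions (one numeric feature, equal-width buckets, non-empty samples), and the function name `population_stability_index` is hypothetical rather than taken from any particular library.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric feature; larger values mean a bigger shift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all training values are equal

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the training range
            counts[idx] += 1
        # A small floor keeps empty buckets from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative data only: production values shifted upward relative to training.
training_sample = [i / 100 for i in range(1000)]
production_sample = [x + 5 for x in training_sample]
drift_score = population_stability_index(training_sample, production_sample)
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but the right threshold depends on the feature and the business context.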

Another fundamental point is governance. Models can influence pricing, credit, recommendations, or operational decisions. That brings direct implications for privacy, explainability, and regulatory compliance. MLOps exists precisely to handle this added complexity in a structured way.

Key differences include:

- Data-driven behavior instead of rule-based logic

- Silent failures caused by data or concept drift

- Higher regulatory and ethical risks

- Continuous retraining requirements

How the MLOps lifecycle works in practice

In a mature MLOps environment, a model’s lifecycle is treated as a continuous process—not a one-time event. It starts with data. Data must be collected, validated, and versioned so experiments can be reproduced and future decisions can be understood. Without data control, any attempt at governance becomes fragile.
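As a rough illustration of validating and versioning data, the sketch below checks a batch for missing fields and derives a content hash that can be stored next to a trained model. The names `validate_rows` and `dataset_fingerprint` are hypothetical, and the sketch assumes rows are small JSON-serializable dictionaries; a real setup would more likely rely on a dedicated data versioning tool.

```python
import hashlib
import json


def validate_rows(rows, required_fields):
    """Return a list of human-readable problems; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                problems.append(f"row {i}: missing {field}")
    return problems


def dataset_fingerprint(rows):
    """Content hash that changes when any value changes, regardless of row order."""
    canonical = sorted(json.dumps(row, sort_keys=True) for row in rows)
    digest = hashlib.sha256("\n".join(canonical).encode("utf-8"))
    return digest.hexdigest()


# Illustrative batch; the field names are assumptions for the example.
batch = [
    {"customer_id": 1, "income": 5200.0},
    {"customer_id": 2, "income": None},
]
issues = validate_rows(batch, required_fields=["customer_id", "income"])
version = dataset_fingerprint(batch)
```

Recording the fingerprint with each training run makes it possible to say later exactly which data a given model saw.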

Next, models are trained and evaluated systematically. Each experiment records metrics, parameters, and results, enabling objective comparisons and evidence-based choices. When a model is considered ready, it goes through a controlled deployment process—often gradual—to reduce risk.
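The idea of recording each experiment so that runs can be compared objectively can be sketched with a small in-memory record. This is a minimal illustration, not a substitute for an experiment tracking tool; `ExperimentRun`, `best_run`, and all parameter and metric names below are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExperimentRun:
    run_id: str
    data_version: str  # e.g. a content hash of the training data
    params: dict
    metrics: dict
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def best_run(runs, metric, higher_is_better=True):
    """Pick the run with the best value for one metric, ignoring runs that lack it."""
    candidates = [r for r in runs if metric in r.metrics]
    if not candidates:
        raise ValueError(f"no run recorded metric {metric!r}")
    pick = max if higher_is_better else min
    return pick(candidates, key=lambda r: r.metrics[metric])


# Illustrative runs with made-up values.
runs = [
    ExperimentRun("run-a", "data-v1", {"max_depth": 4}, {"auc": 0.81, "latency_ms": 12}),
    ExperimentRun("run-b", "data-v1", {"max_depth": 8}, {"auc": 0.84, "latency_ms": 30}),
]
most_accurate = best_run(runs, "auc")
fastest = best_run(runs, "latency_ms", higher_is_better=False)
```

Keeping the data version and parameters alongside the metrics is what allows a choice between runs to be explained and reproduced later.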

After deployment, the most critical phase begins: monitoring. Technical metrics, business performance, and data behavior must be tracked continuously. When signs of degradation appear, the system should allow adjustments or retraining in an organized way, rather than through improvised emergency fixes.
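A simple version of this monitoring step is a rolling check of live accuracy against the accuracy measured at validation time. The sketch below assumes true outcomes eventually become available for each prediction; the class name `AccuracyMonitor`, the tolerance, and the window size are hypothetical choices made for illustration.

```python
from collections import deque


class AccuracyMonitor:
    """Track recent prediction outcomes and flag sustained degradation."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline      # accuracy measured before deployment
        self.tolerance = tolerance    # acceptable drop before raising a flag
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Wait for a full window so a few early errors do not trigger an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


# Illustrative usage with made-up labels.
monitor = AccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
for predicted, actual in [("approve", "approve")] * 10:
    monitor.record(predicted, actual)
healthy = monitor.needs_retraining()
```

The flag is meant to start a planned review or retraining job, not to replace human judgment about why performance changed.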

This cycle repeats continuously, creating an environment of constant and predictable improvement.

In practice, it includes:

- Data versioning and quality control

- Structured experimentation and evaluation

- Safe and gradual deployment strategies

- Continuous technical and business monitoring

- Automated or controlled retraining

This lifecycle creates stability, predictability, and long-term scalability.
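The gradual deployment mentioned in this section is often done by sending a small, stable share of traffic to the new model first. The sketch below shows one possible way to do that with a hash of the user identifier; the function name `route_model` and the labels "stable" and "candidate" are hypothetical.

```python
import hashlib


def route_model(user_id: str, canary_percent: int) -> str:
    """Deterministically assign a user to the stable or the candidate model."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value from 0 to 99
    return "candidate" if bucket < canary_percent else "stable"


# Illustrative rollout: roughly 10% of users see the candidate model.
assignments = [route_model(f"user-{i}", canary_percent=10) for i in range(10_000)]
candidate_share = assignments.count("candidate") / len(assignments)
```

Because the assignment depends only on the user identifier, each user keeps seeing the same model as the percentage is raised, which makes the two groups easier to compare.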

Concrete benefits of MLOps for companies

Companies that apply MLOps consistently can scale their AI initiatives far more safely. Automation reduces rework and dependence on any one person’s knowledge, while standardization makes audits and risk assessments easier. With clear processes, the time between a model improvement and its real application in the business decreases significantly.

Another major benefit is trust. When leaders know models are monitored, versioned, and auditable, AI stops being seen as an experiment and starts being treated as a critical part of operations. That strengthens decision-making and increases the organization’s digital maturity.

Companies that adopt MLOps effectively achieve:

- Scalable AI operations with reduced risk

- Lower operational costs over time

- Faster iteration cycles

- Stronger trust from business stakeholders

- Sustainable AI-driven decision-making

Common mistakes when ignoring MLOps

One of the most frequent mistakes is treating MLOps as simply adopting a specific tool. Without clear processes and well-defined responsibilities, even the best technology won’t solve the problem. Another common error is focusing only on the moment of deployment, forgetting that the real challenge begins after the model goes live.

It’s also common to underestimate security and privacy, especially when sensitive data is involved. These oversights can cause significant legal and reputational damage. Finally, a lack of integration between data, engineering, and business teams often creates bottlenecks that prevent sustainable model evolution.

Typical pitfalls include:

- Treating MLOps as a tool instead of a process

- Ignoring post-deployment monitoring

- Underestimating security and privacy risks

- Operating in silos without shared ownership

These issues often surface only after AI becomes business-critical.

When it makes sense to seek specialized support

As AI becomes a central part of a company’s strategy, operational complexity grows. When there are multiple models in production, stricter compliance requirements, or a direct dependence on automated decisions, specialized support can accelerate maturity and reduce risk.

A structured MLOps approach helps avoid improvised solutions, align expectations across teams, and build a solid foundation for future growth.

Specialized support becomes valuable when:

- AI directly impacts core business decisions

- Multiple models are running in production

- Regulatory or audit requirements increase

- Internal teams struggle with operational overhead

A structured MLOps approach accelerates maturity and reduces long-term risk.

Sources consulted

Wikipedia – MLOps: https://en.wikipedia.org/wiki/MLOps

Google Cloud – MLOps: Continuous Delivery and Automation Pipelines: https://cloud.google.com/architecture/mlops-continuous-delivery-and-automation-pipelines-in-machine-learning

Microsoft Learn – MLOps Architecture: https://learn.microsoft.com/en-us/azure/architecture/example-scenario/mlops/mlops-architecture

LakeFS – What is MLOps?: https://lakefs.io/mlops/